OIMNet++: Prototypical Normalization and Localization-aware Learning for Person Search

ECCV 2022

S. Lee, Y. Oh, D. Baek, J. Lee, B. Ham. OIMNet++: Prototypical Normalization and Localization-aware Learning for Person Search. In Proceedings of the European Conference on Computer Vision (ECCV), 2022. [ArXiv] [Code]

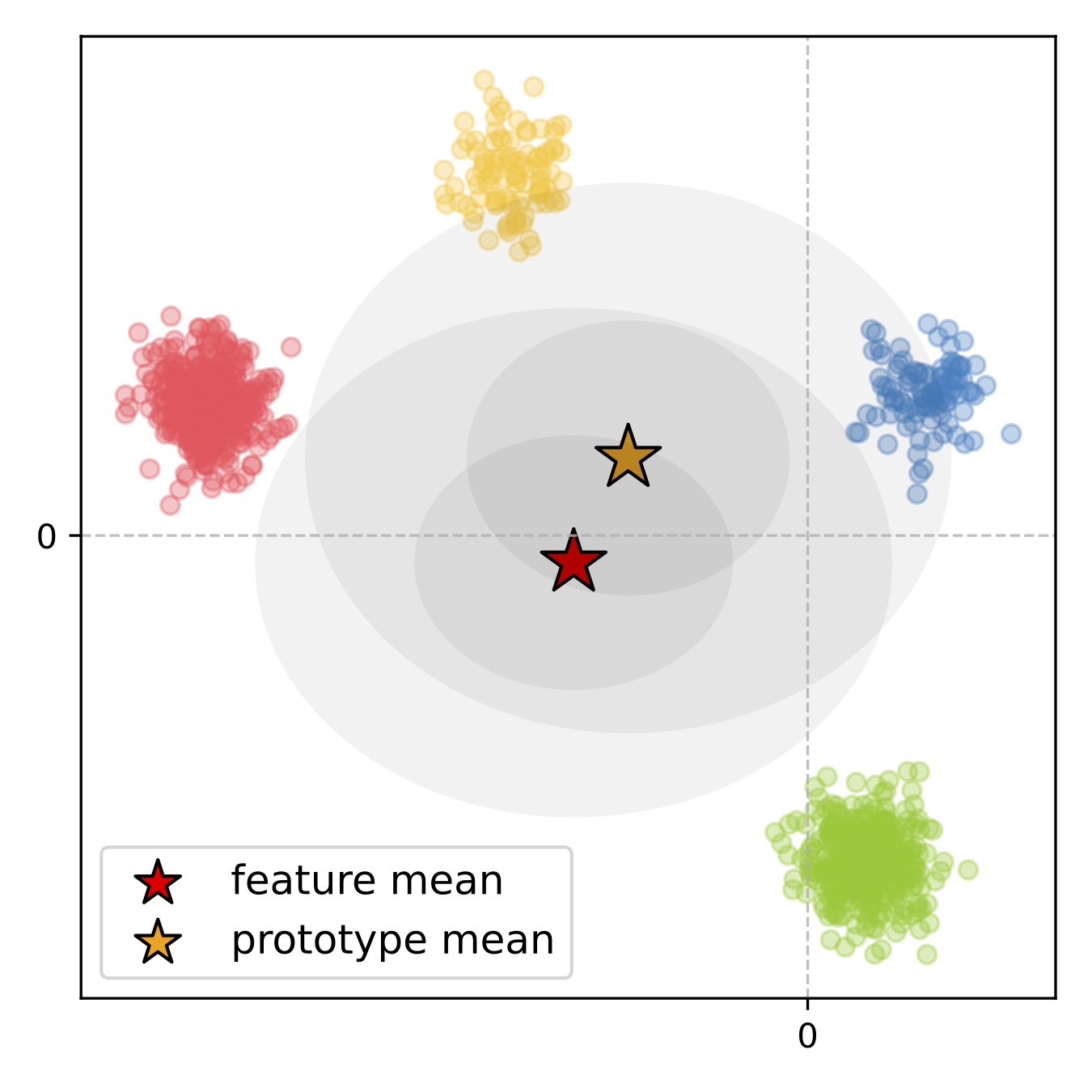

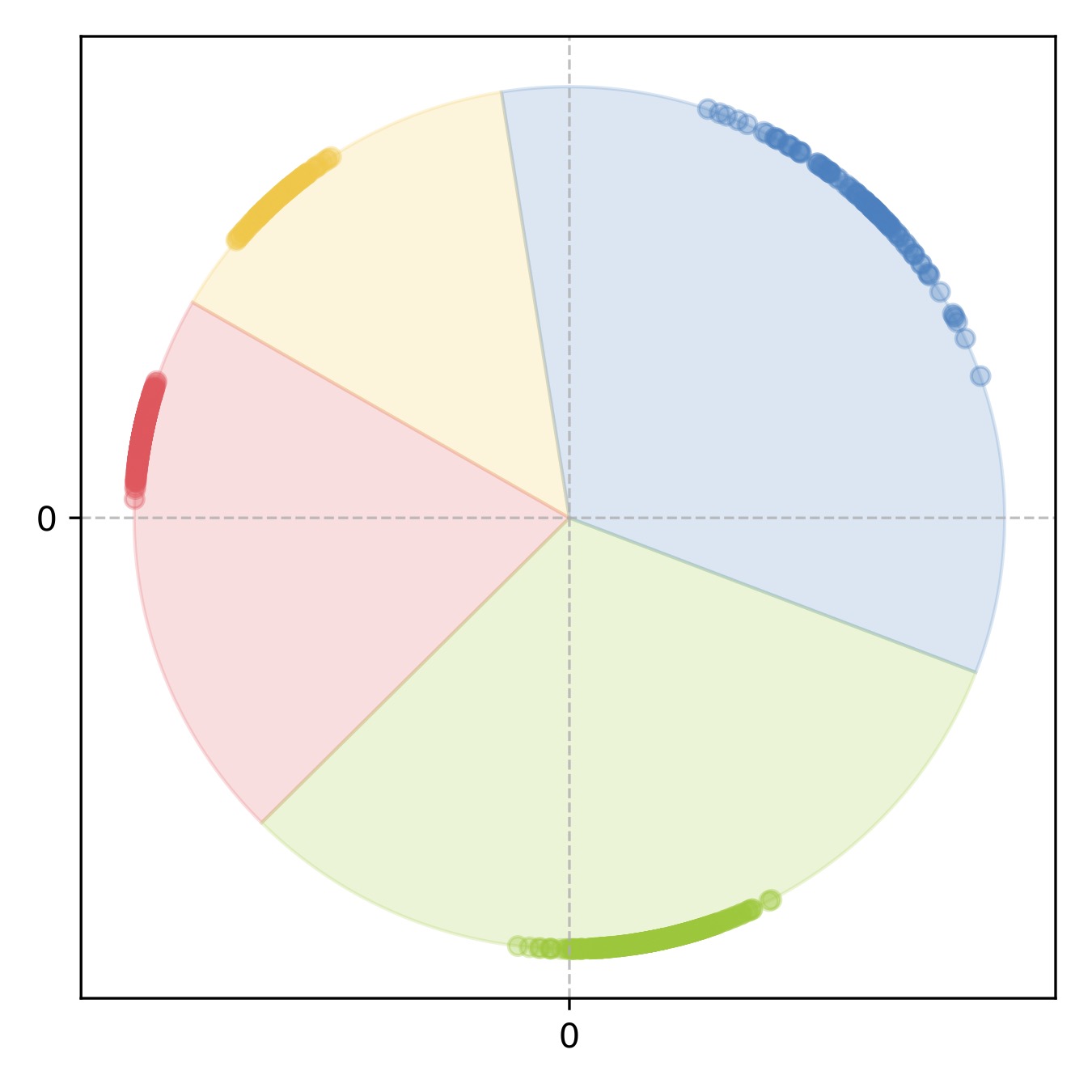

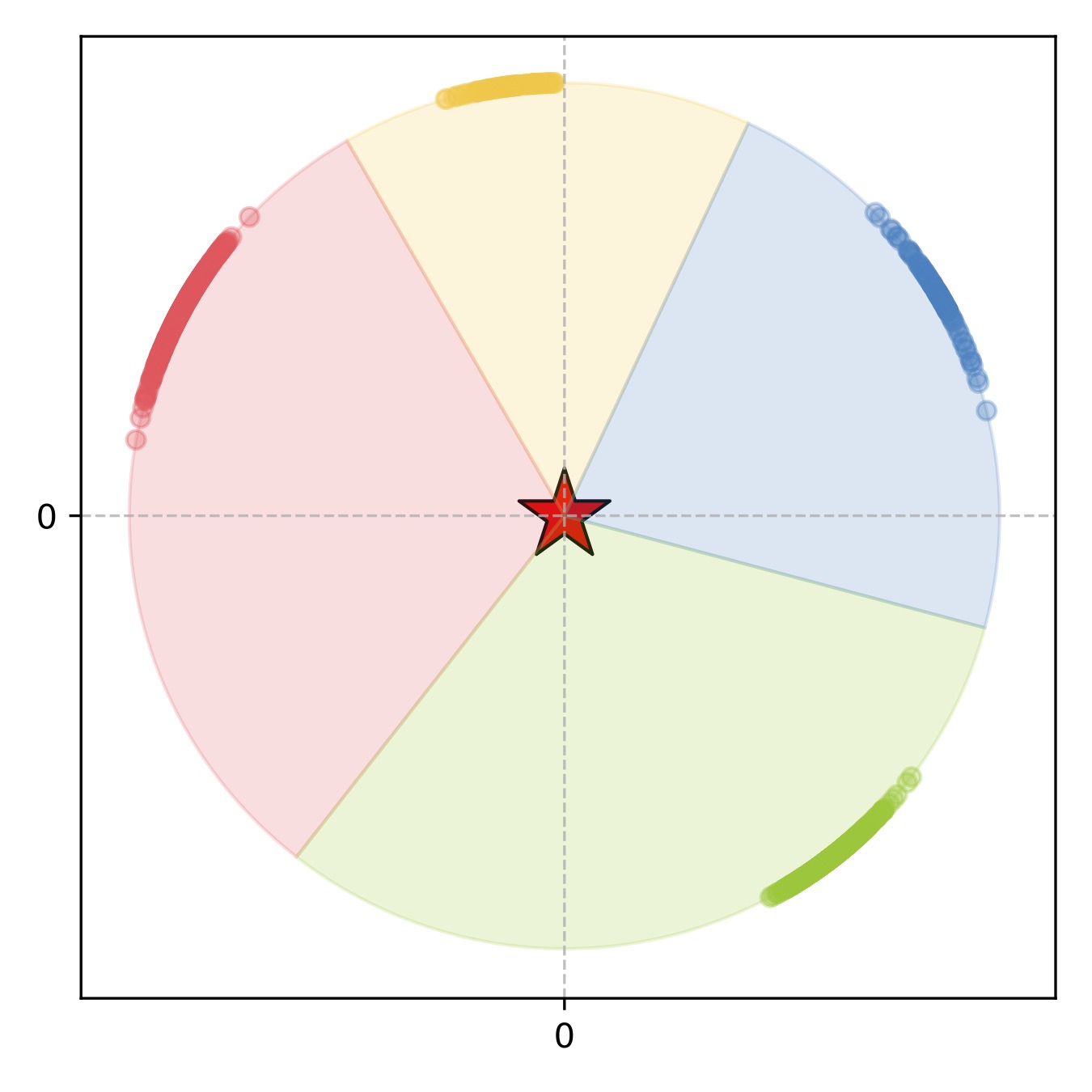

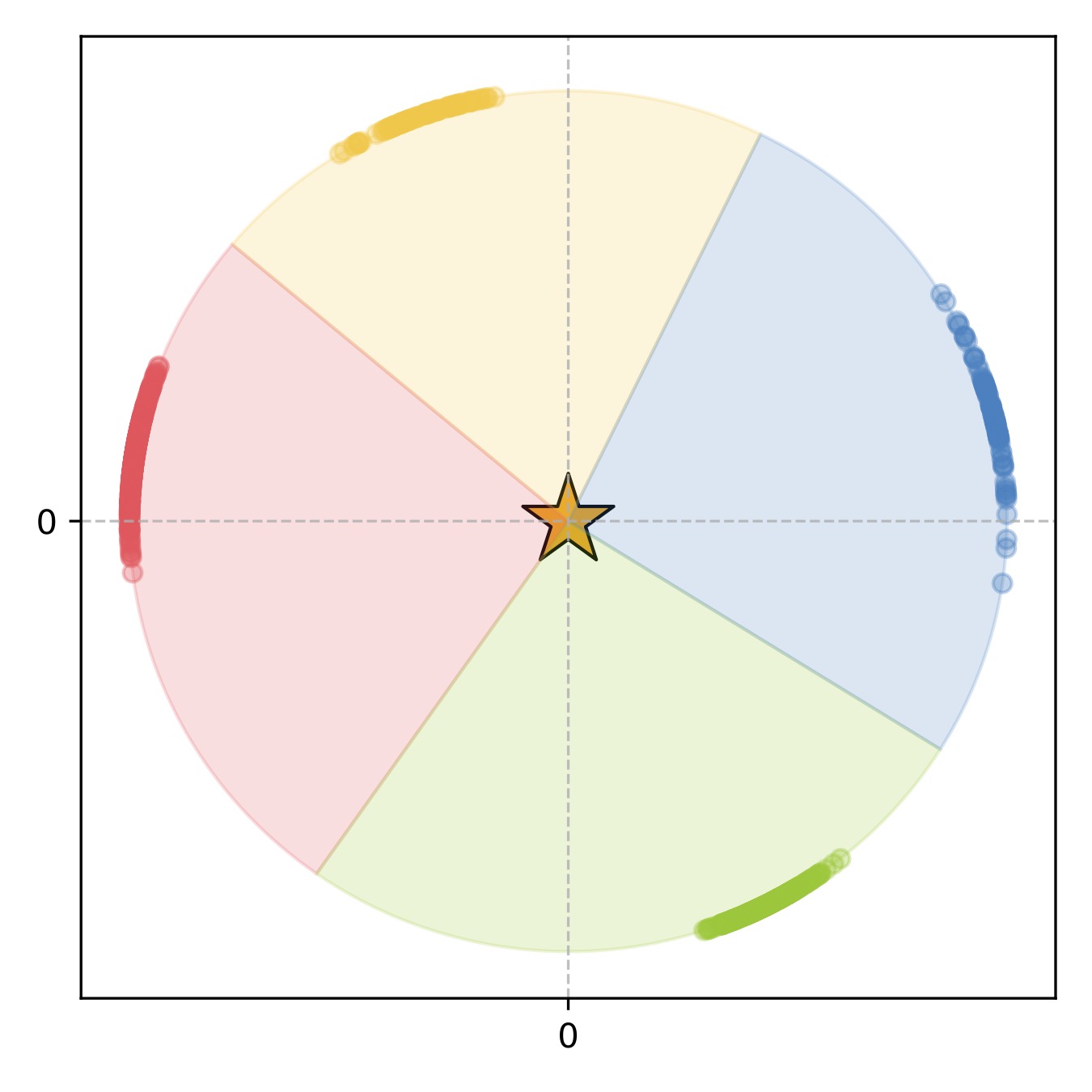

In (a), we visualize synthetic 2D features as circles, where each color represents an ID label. Stars mark the mean computed from the input features (red) and from the ID prototypes (yellow). Note that the pink and green features are sampled 4× more often. The features are clearly not zero-centered with unit variance. In this case, simply applying L2 normalization degrades the discriminative power, as shown in (b), where background colors indicate decision boundaries. Standardizing the features with the feature mean and variance, i.e., in a BatchNorm fashion, prior to L2 normalization alleviates this problem in (c). However, this does not take the sample distribution across IDs into account when calibrating the feature distribution. The distribution is thus biased towards majority IDs, which weakens inter-class separability. In contrast, calibrating the feature distribution using ID prototypes with ProtoNorm provides highly discriminative L2-normalized features in (d), where each ID is assigned a similar angular space.
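The bias described in this caption can be reproduced with a few lines of arithmetic. The sketch below is only an illustration: the two IDs, their coordinates, and the 8-versus-2 sample counts are made-up values, not data from the paper. It compares the mean over all input features (BatchNorm-style) with the mean over one prototype per ID (ProtoNorm-style).

```python
# Hypothetical synthetic 2D features: one ID is sampled 4x more often.
features = {
    "majority_id": [(4.0, 1.0)] * 8,
    "minority_id": [(1.0, 4.0)] * 2,
}

def mean(points):
    """Per-dimension mean of a list of equal-length tuples."""
    n = len(points)
    return tuple(sum(p[d] for p in points) / n for d in range(len(points[0])))

# BatchNorm-style statistic: every sample counts once, so frequent IDs dominate.
all_points = [p for pts in features.values() for p in pts]
feature_mean = mean(all_points)

# ProtoNorm-style statistic: average each ID into a prototype first,
# then average the prototypes, so every ID counts once.
prototypes = [mean(pts) for pts in features.values()]
prototype_mean = mean(prototypes)

print("feature mean:  ", feature_mean)    # pulled towards the majority ID
print("prototype mean:", prototype_mean)  # midway between the two IDs
```

With these values the feature mean sits close to the majority ID, while the prototype mean lies midway between the two IDs, which is the behavior the caption attributes to the red and yellow stars.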

Abstract

We address the task of person search, that is, localizing and re-identifying query persons from a set of raw scene images. Recent approaches are typically built upon OIMNet, a pioneering work on person search that learns joint person representations for performing both detection and person re-identification (reID) tasks. To obtain the representations, they extract features from pedestrian proposals, and then project them onto a unit hypersphere with L2 normalization. These methods also incorporate all positive proposals that sufficiently overlap with the ground truth equally to learn person representations for reID. We have found that 1) L2 normalization without considering feature distributions degrades the discriminative power of person representations, and 2) positive proposals often also depict background clutter and person overlaps, which could encode noisy features into person representations. In this paper, we introduce OIMNet++, which addresses the aforementioned limitations. To this end, we introduce a novel normalization layer, dubbed ProtoNorm, that calibrates features from pedestrian proposals while considering the long-tail distribution of person IDs, enabling L2-normalized person representations to be discriminative. We also propose a localization-aware feature learning scheme that encourages better-aligned proposals to contribute more to learning discriminative representations. Experimental results and analysis on standard person search benchmarks demonstrate the effectiveness of OIMNet++.

Approach

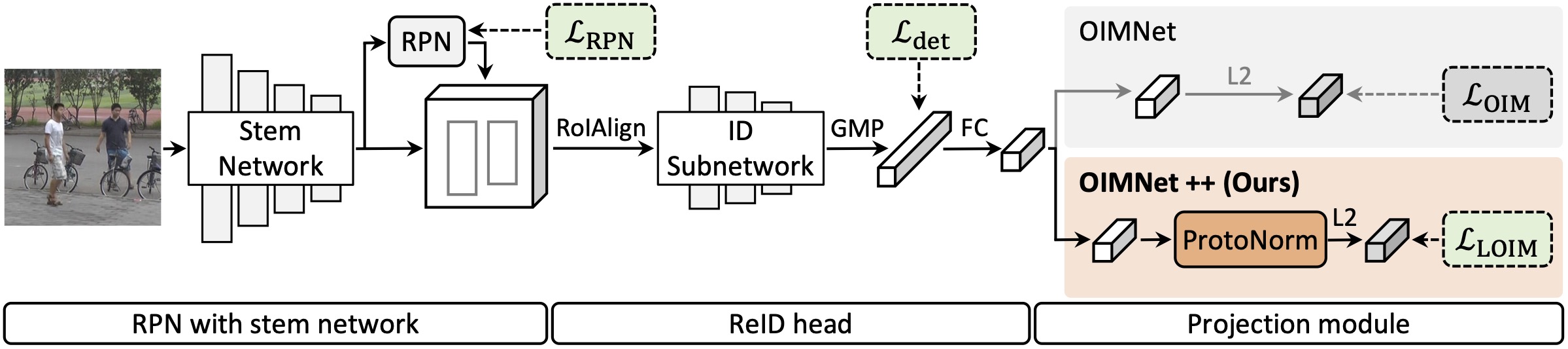

An overview of OIMNet++. Similar to OIMNet, OIMNet++ mainly consists of three parts: an RPN with a stem network, a reID head, and a projection module. The main differences between OIMNet++ (bottom) and OIMNet (top) are the projection module and the training loss. We incorporate a ProtoNorm layer to explicitly standardize features prior to L2 normalization, while accounting for the class imbalance problem in person search. We also exploit the LOIM loss, which leverages the localization accuracy of object proposals to learn discriminative features. See our paper for more details.
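As a rough illustration of the projection step described above, the following sketch standardizes features with statistics computed from per-ID prototypes and then applies L2 normalization. It is a minimal sketch under stated assumptions, not the authors' implementation: the function name `proto_norm`, the `eps` value, and the toy inputs are hypothetical, and any learnable affine parameters or running statistics a real layer might carry are left out.

```python
import math
from collections import defaultdict

def proto_norm(features, ids, eps=1e-5):
    """Standardize features with prototype statistics, then L2-normalize.

    Hypothetical helper: `features` is a list of equal-length vectors and
    `ids` gives the person ID of each vector.
    """
    dim = len(features[0])

    # Aggregate all features that share an ID into a single prototype.
    groups = defaultdict(list)
    for feat, pid in zip(features, ids):
        groups[pid].append(feat)
    protos = [
        [sum(f[d] for f in feats) / len(feats) for d in range(dim)]
        for feats in groups.values()
    ]

    # Per-dimension mean and variance over prototypes, not over raw samples.
    mu = [sum(p[d] for p in protos) / len(protos) for d in range(dim)]
    var = [sum((p[d] - mu[d]) ** 2 for p in protos) / len(protos) for d in range(dim)]

    out = []
    for feat in features:
        z = [(feat[d] - mu[d]) / math.sqrt(var[d] + eps) for d in range(dim)]
        norm = math.sqrt(sum(v * v for v in z)) or 1.0  # guard against zero vectors
        out.append([v / norm for v in z])
    return out

# Toy input (made-up values): ID 0 appears 4x more often than ID 1.
feats = [(4.0, 1.0)] * 8 + [(1.0, 4.0)] * 2
pids = [0] * 8 + [1] * 2
normalized = proto_norm(feats, pids)
cosine = sum(a * b for a, b in zip(normalized[0], normalized[-1]))
print("cosine similarity between the two IDs:", cosine)
```

In this toy case the two IDs end up on opposite sides of the unit circle even though one of them supplies most of the samples.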

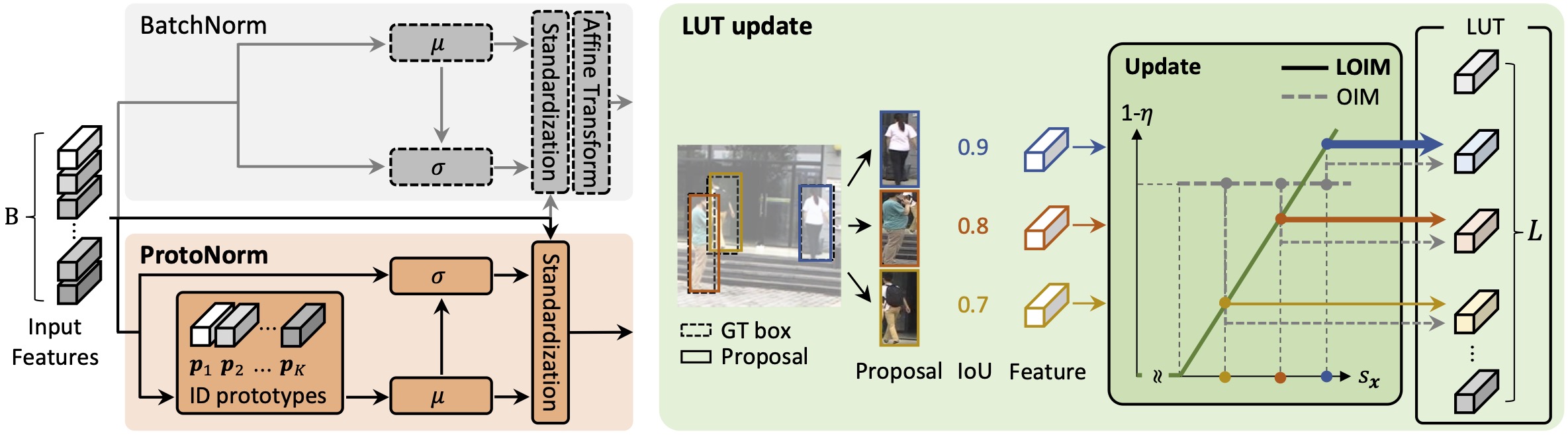

Left: A comparison between BatchNorm and ProtoNorm. BatchNorm computes feature statistics directly from the input features. ProtoNorm, on the other hand, aggregates multiple features with the same ID into a single prototype. It then computes the mean and variance from the prototype features, alleviating the bias towards dominant IDs. Right: The lookup table (LUT) update scheme within the LOIM loss. The vanilla OIM loss assigns equal momentum values to all positive proposals, regardless of their localization quality. The LOIM loss instead assigns an adaptive momentum value to each proposal according to its IoU with the ground truth. Thicker arrows indicate larger updates to the LUT. See our paper for more details.
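The idea of an IoU-dependent LUT update can be sketched as follows. This is an assumption-laden illustration rather than the formulation in the paper: the function `update_lut_entry`, the linear mapping from IoU to update weight, and the default `base_momentum` of 0.5 are all hypothetical choices made here for demonstration.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]

def update_lut_entry(entry, feature, iou, base_momentum=0.5):
    """Momentum update of one LUT entry, weighted by localization quality.

    Assumed mapping (not taken from the paper): the update weight grows
    linearly with IoU, so better-aligned proposals move the entry further.
    """
    weight = (1.0 - base_momentum) * iou
    mixed = [(1.0 - weight) * e + weight * f for e, f in zip(entry, feature)]
    return l2_normalize(mixed)

# Toy example with made-up vectors: same feature, different IoU values.
entry = [1.0, 0.0]
feature = [0.0, 1.0]
well_aligned = update_lut_entry(entry, feature, iou=0.9)
poorly_aligned = update_lut_entry(entry, feature, iou=0.5)
print("IoU 0.9:", well_aligned)
print("IoU 0.5:", poorly_aligned)
```

Under this assumed mapping, the proposal with the higher IoU pulls the LUT entry further towards its feature, matching the thicker arrows in the figure; a fixed weight for every proposal would correspond to the vanilla OIM behavior described above.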

Acknowledgements

This work was partly supported by Institute of Information & communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (No.RS-2022-00143524, Development of Fundamental Technology and Integrated Solution for Next-Generation Automatic Artificial Intelligence System, and No.2021-0-02068, Artificial Intelligence Innovation Hub), the Yonsei Signature Research Cluster Program of 2022 (2022-22-0002), and the KIST Institutional Program (Project No.2E31051-21-203).