Learning by Aligning: Visible-Infrared Person Re-identification using Cross-Modal Correspondences

ICCV 2021

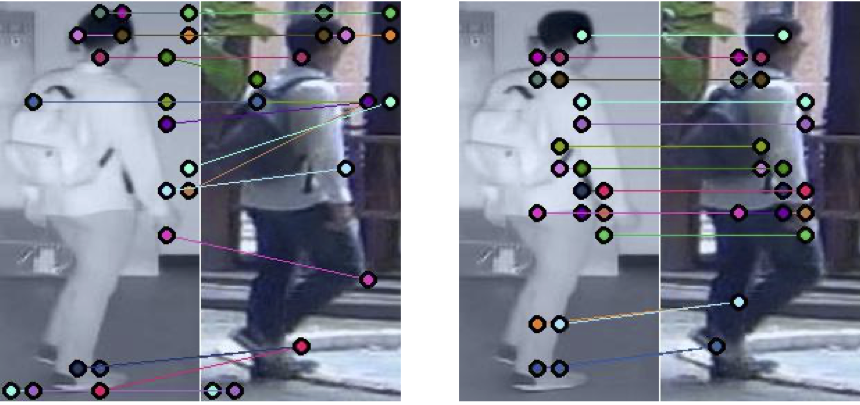

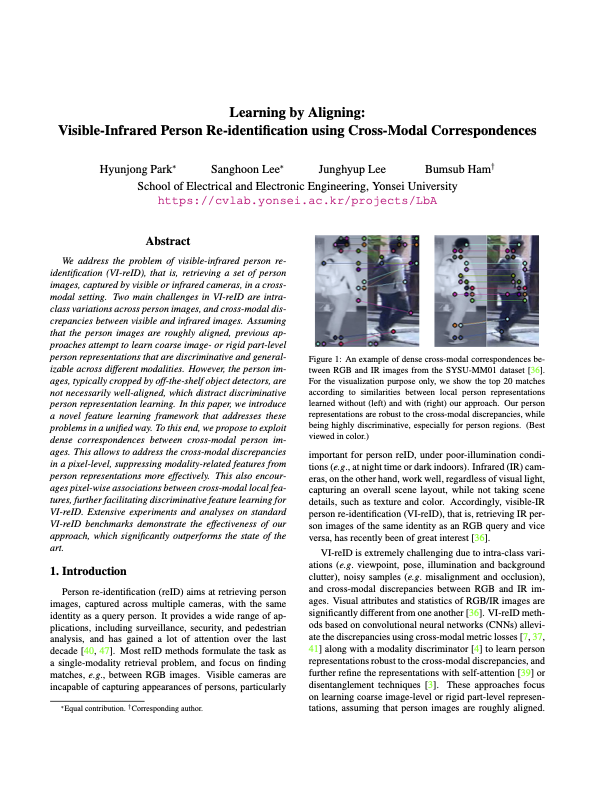

An example of dense cross-modal correspondences between RGB and IR person images. For visualization purposes only, we show the top 20 matches according to similarities between local person representations learned without (left) and with (right) our approach. Our person representations are robust to cross-modal discrepancies while being highly discriminative, especially for person regions.

Abstract

We address the problem of visible-infrared person re-identification (VI-reID), that is, retrieving a set of person images, captured by visible or infrared cameras, in a cross-modal setting. Two main challenges in VI-reID are intra-class variations across person images, and cross-modal discrepancies between visible and infrared images. Assuming that the person images are roughly aligned, previous approaches attempt to learn coarse image- or rigid part-level person representations that are discriminative and generalizable across different modalities. However, the person images, typically cropped by off-the-shelf object detectors, are not necessarily well-aligned, which distracts discriminative person representation learning. In this paper, we introduce a novel feature learning framework that addresses these problems in a unified way. To this end, we propose to exploit dense correspondences between cross-modal person images. This allows us to address the cross-modal discrepancies at the pixel level, suppressing modality-related features from person representations more effectively. This also encourages pixel-wise associations between cross-modal local features, further facilitating discriminative feature learning for VI-reID. Extensive experiments and analyses on standard VI-reID benchmarks demonstrate the effectiveness of our approach, which significantly outperforms the state of the art.

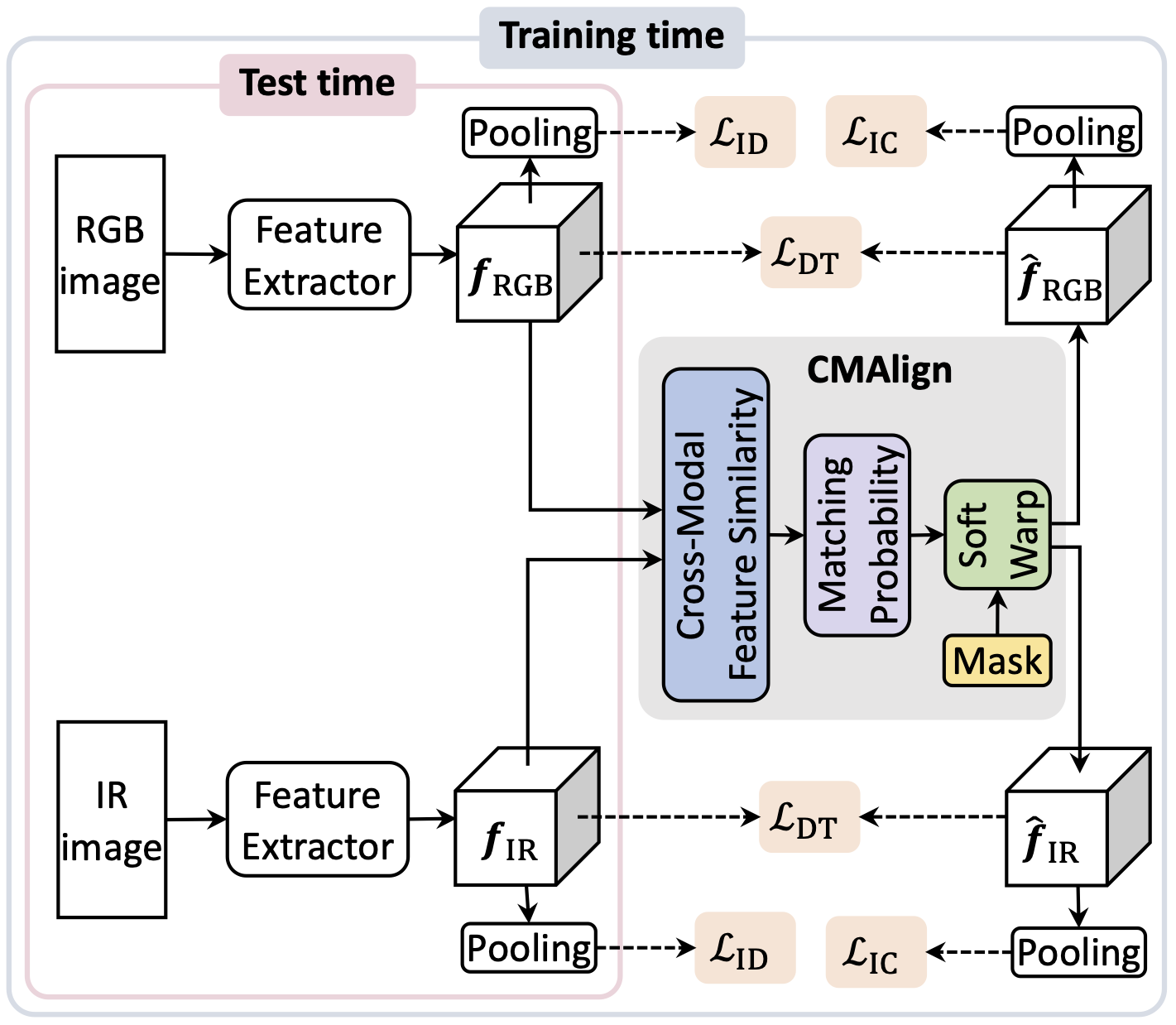

Approach

Overview of our framework for VI-reID. We extract RGB and IR features, denoted by \( \mathbf{f}_{\mathrm{RGB}} \) and \( \mathbf{f}_{\mathrm{IR}} \), respectively, using a two-stream CNN. The CMAlign module computes cross-modal feature similarities and matching probabilities between these features, and aligns the cross-modal features w.r.t. each other using soft warping, together with parameter-free person masks to mitigate ambiguous matches between background regions. We exploit both original RGB and IR features and aligned ones (\( \hat{\mathbf{f}}_{\mathrm{RGB}} \) and \( \hat{\mathbf{f}}_{\mathrm{IR}} \)) during training, and incorporate them into our objective function consisting of ID (\( \mathcal{L}_{\mathrm{ID}} \)), ID consistency (\( \mathcal{L}_{\mathrm{IC}} \)) and dense triplet (\( \mathcal{L}_{\mathrm{DT}} \)) terms. At test time, we compute cosine distances between person representations, obtained by pooling RGB and IR features. See our paper for details.
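The alignment and test-time steps described above can be illustrated with a minimal NumPy sketch. It is not the released implementation, and several details are assumptions made only for illustration: the function names `cm_align`, `person_mask` and `cosine_distance`, the `temperature` parameter of the softmax, the use of channel-wise L2 norms as the parameter-free mask, the mask-weighted blend of warped and original features, and average pooling at test time. The paper should be consulted for the actual formulation.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors along `axis` to unit L2 norm."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def person_mask(feat):
    """Parameter-free soft mask of shape (H, W) with values in [0, 1].

    Assumption: positions with a large feature norm are more likely to
    belong to the person, so the norm map is min-max scaled.
    """
    act = np.linalg.norm(feat, axis=-1)
    return (act - act.min()) / (act.max() - act.min() + 1e-12)

def cm_align(f_a, f_b, temperature=0.05):
    """Softly warp f_b onto the spatial layout of f_a.

    f_a, f_b: feature maps of shape (H, W, C) from the two modalities.
    Returns the aligned features (H, W, C) and the matching
    probabilities (H*W, H*W), where row p is a distribution over
    positions of f_b.
    """
    h, w, c = f_a.shape
    a = f_a.reshape(-1, c)
    b = f_b.reshape(-1, c)
    # Cosine similarity between every pair of local features.
    sim = l2_normalize(a) @ l2_normalize(b).T
    # Matching probabilities: softmax over positions of the other modality.
    prob = softmax(sim / temperature, axis=1)
    # Soft warping: each position is a probability-weighted sum of f_b.
    warped = (prob @ b).reshape(h, w, c)
    # Trust warped features on likely person regions, keep the original
    # features elsewhere (assumed blending rule).
    mask = person_mask(f_a)[..., None]
    aligned = mask * warped + (1.0 - mask) * f_a
    return aligned, prob

def cosine_distance(query_feats, gallery_feats):
    """Pairwise cosine distances between pooled person representations.

    Inputs have shape (N, H, W, C); average pooling is an assumption.
    """
    q = l2_normalize(query_feats.mean(axis=(1, 2)))
    g = l2_normalize(gallery_feats.mean(axis=(1, 2)))
    return 1.0 - q @ g.T

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    f_rgb = rng.normal(size=(4, 3, 16))  # toy stand-ins for CNN features
    f_ir = rng.normal(size=(4, 3, 16))
    f_rgb_hat, p = cm_align(f_rgb, f_ir)
    print(f_rgb_hat.shape, p.shape)
```

In this sketch, calling `cm_align(f_rgb, f_ir)` plays the role of producing \( \hat{\mathbf{f}}_{\mathrm{RGB}} \) and calling `cm_align(f_ir, f_rgb)` that of \( \hat{\mathbf{f}}_{\mathrm{IR}} \). The alignment is used only during training, so `cosine_distance` operates on the original features alone.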

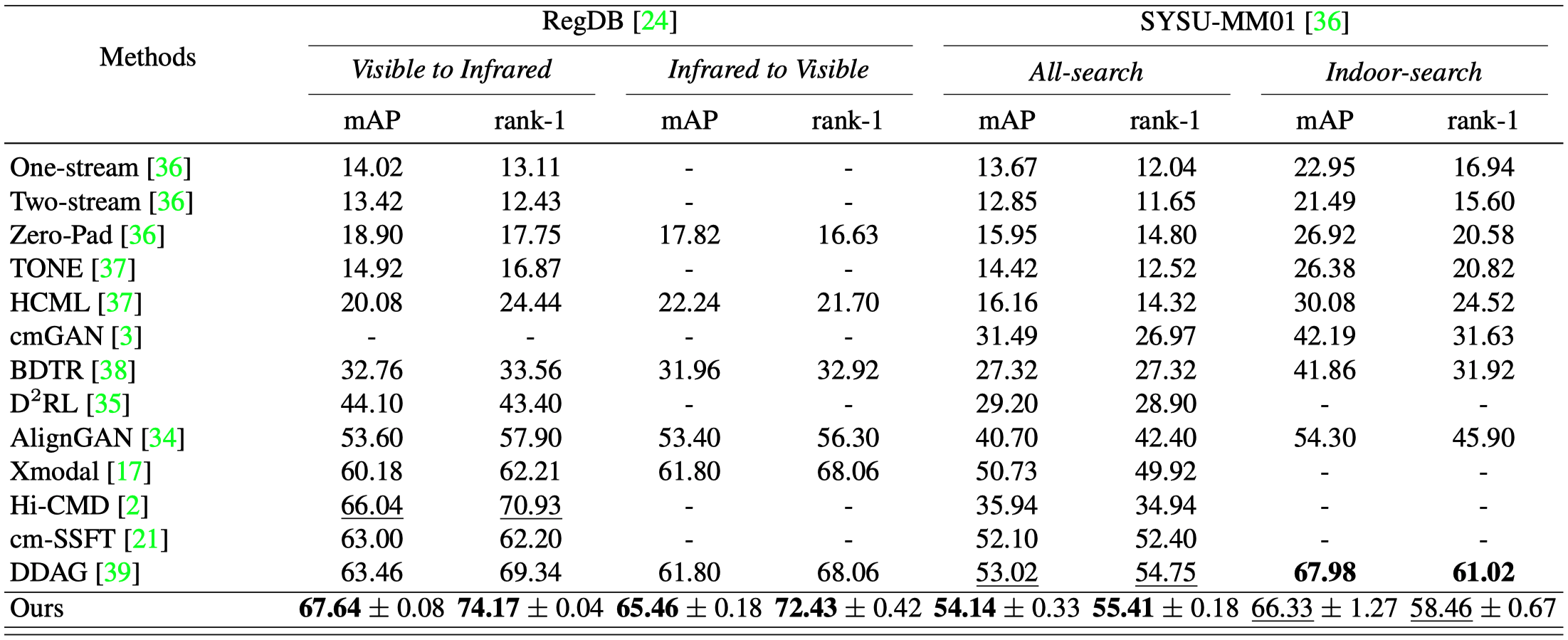

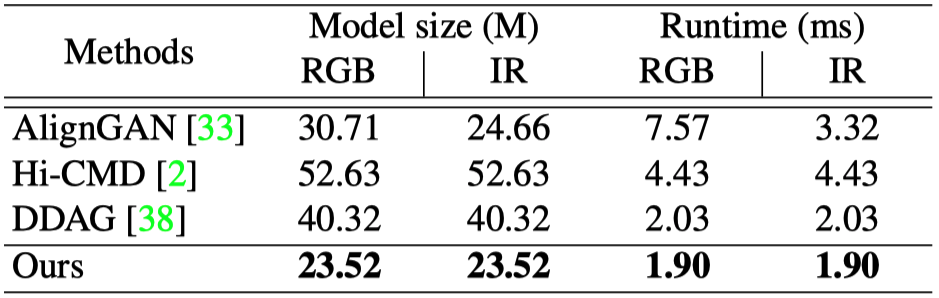

Experiment

Paper

H. Park, S. Lee, J. Lee, B. Ham. Learning by Aligning: Visible-Infrared Person Re-identification using Cross-Modal Correspondences. In IEEE/CVF International Conference on Computer Vision (ICCV), 2021. [ArXiv] [Code] [Bibtex]

Acknowledgements

This research was partly supported by the R&D program for Advanced Integrated-intelligence for Identification (AIID) through the National Research Foundation of Korea (NRF) funded by the Ministry of Science and ICT (NRF2018M3E3A1057289), the Institute for Information and Communications Technology Promotion (IITP) funded by the Korean Government (MSIP) under Grant 2016-0-00197, and the Yonsei University Research Fund of 2021 (2021-22-0001).