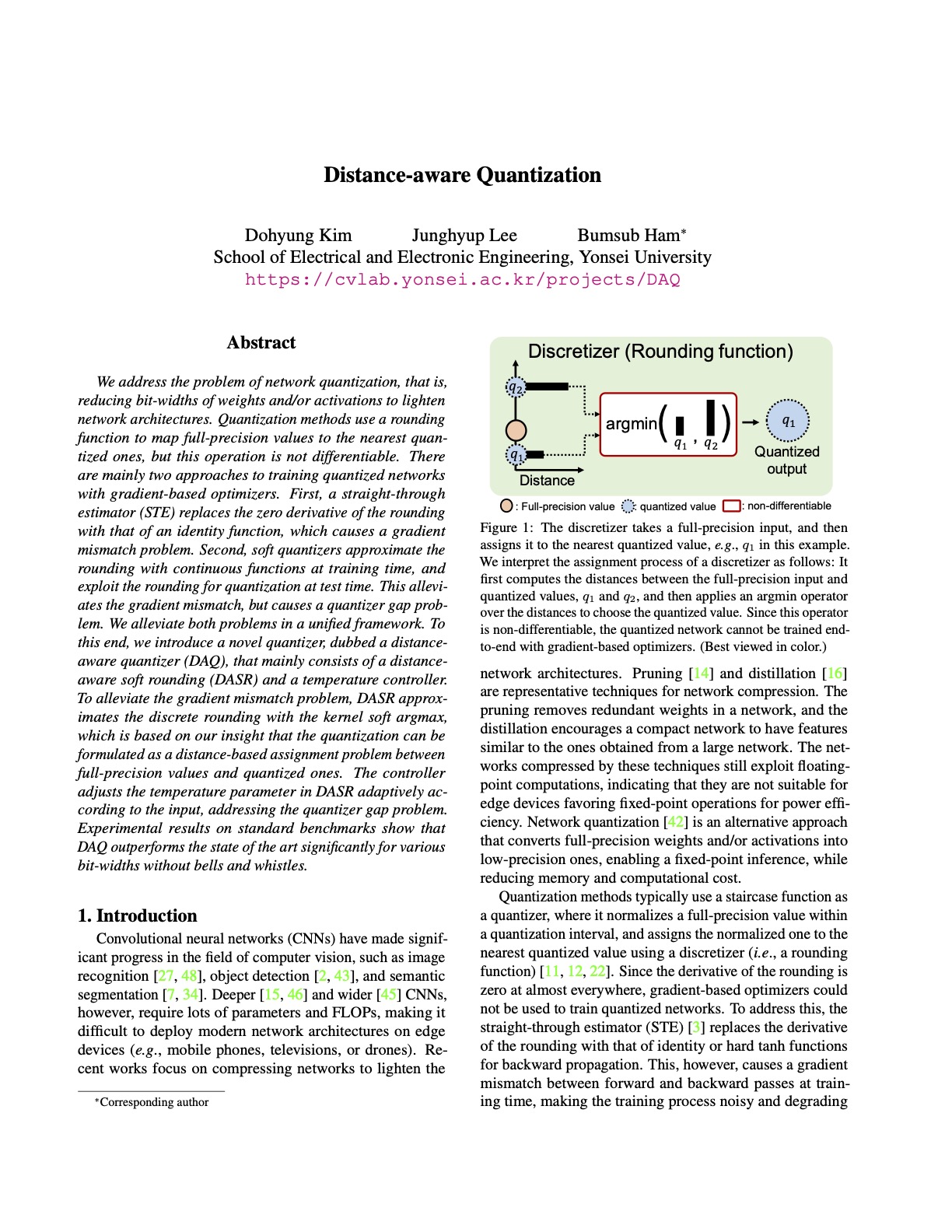

Distance-aware Quantization (ICCV 2021)

Abstract

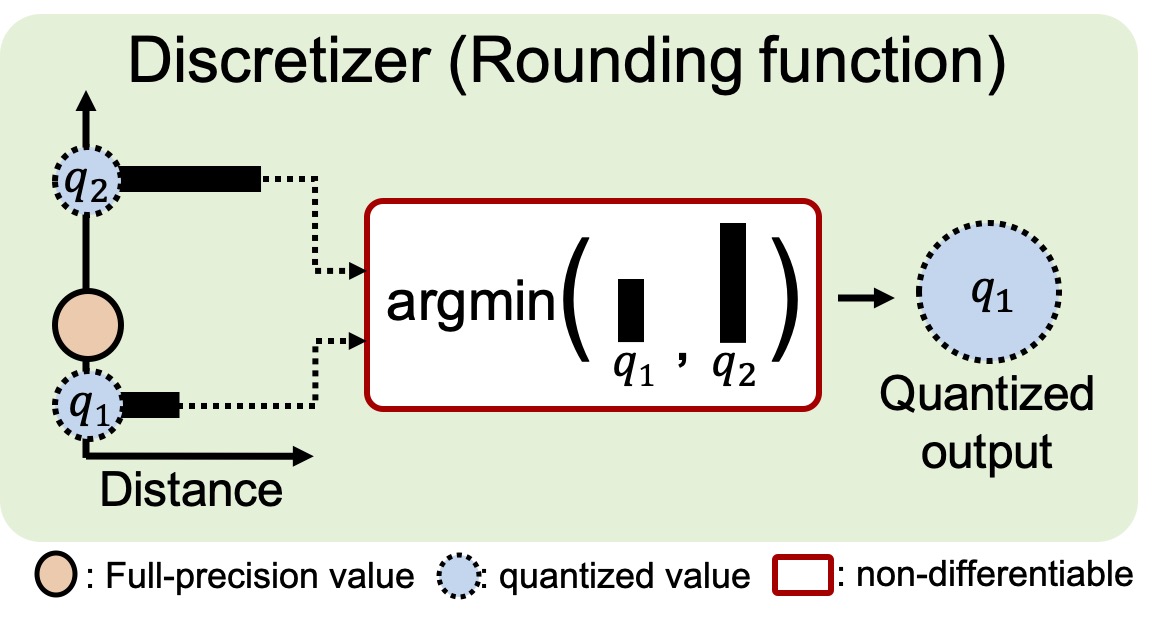

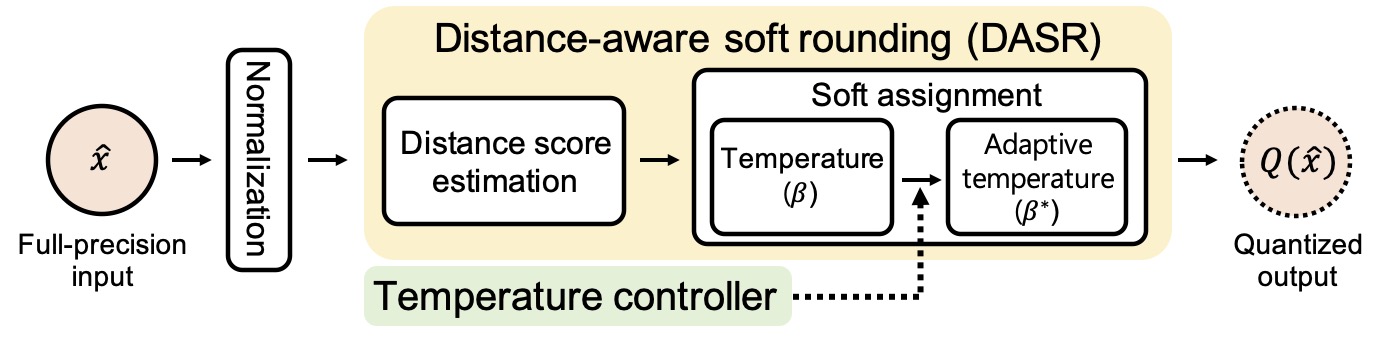

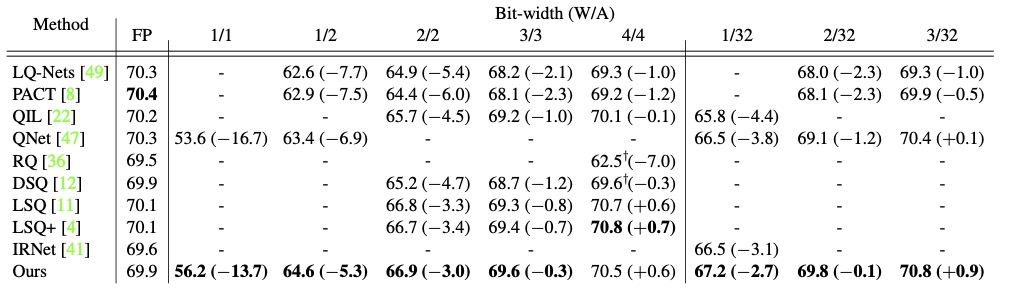

We address the problem of network quantization, that is, reducing bit-widths of weights and/or activations to lighten network architectures. Quantization methods use a rounding function to map full-precision values to the nearest quantized ones, but this operation is not differentiable. There are mainly two approaches to training quantized networks with gradient-based optimizers. First, a straight-through estimator (STE) replaces the zero derivative of the rounding with that of an identity function, which causes a gradient mismatch problem. Second, soft quantizers approximate the rounding with continuous functions at training time, and exploit the rounding for quantization at test time. This alleviates the gradient mismatch, but causes a quantizer gap problem. We alleviate both problems in a unified framework. To this end, we introduce a novel quantizer, dubbed a distance-aware quantizer (DAQ), that mainly consists of a distance-aware soft rounding (DASR) and a temperature controller. To alleviate the gradient mismatch problem, DASR approximates the discrete rounding with the kernel soft argmax, which is based on our insight that the quantization can be formulated as a distance-based assignment problem between full-precision values and quantized ones. The controller adjusts the temperature parameter in DASR adaptively according to the input, addressing the quantizer gap problem. Experimental results on standard benchmarks show that DAQ outperforms the state of the art significantly for various bit-widths without bells and whistles.
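The distance-based view of rounding described above can be illustrated with a small NumPy sketch. It is not the paper's implementation: it uses a plain softmax-weighted average over negative distances in place of the kernel soft argmax, and a fixed, hand-picked temperature in place of the adaptive controller. The names `hard_round`, `soft_round`, and `beta`, and the 2-bit level grid, are assumptions made for illustration only.

```python
import numpy as np


def hard_round(x, levels):
    """Test-time quantizer: map each value to its nearest quantization level."""
    dist = np.abs(x[:, None] - levels[None, :])
    return levels[dist.argmin(axis=1)]


def soft_round(x, levels, beta):
    """Differentiable surrogate (illustrative, not the paper's DASR).

    Assigns each value to the levels with softmax weights over negative
    distances, then returns the weighted average of the levels.
    `beta` is a hypothetical temperature: larger means a sharper assignment.
    """
    dist = np.abs(x[:, None] - levels[None, :])
    logits = -beta * dist
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ levels


levels = np.arange(4, dtype=np.float64)  # assumed 2-bit grid: 0, 1, 2, 3
x = np.array([0.2, 1.4, 2.6])

# Largest difference between the soft (training) and hard (test) quantizer
# outputs, measured at increasing temperatures.
gaps = []
for beta in (1.0, 10.0, 100.0):
    gap = np.abs(soft_round(x, levels, beta) - hard_round(x, levels)).max()
    gaps.append(gap)
    print(f"beta={beta:6.1f}  max |soft - hard| = {gap:.3e}")
```

In this toy setting the difference between the soft and hard outputs shrinks as `beta` grows, which is one way to picture the quantizer gap that the temperature controller is meant to address. A fixed large temperature is used here only for simplicity; the abstract describes a controller that adjusts the temperature adaptively according to the input.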

Overview of our framework

Experiment

Paper

D. Kim, J. Lee, B. Ham. Distance-aware Quantization. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2021. [Paper on arXiv]

BibTeX

@InProceedings{Kim21,

author = "D. Kim and J. Lee and B. Ham",

title = "Distance-aware Quantization",

booktitle = "ICCV",

year = "2021",

}

Acknowledgements

This research was supported by the Samsung Research Funding & Incubation Center for Future Technology (SRFC-IT1802-06).